Noticing trends and patterns in data is a key part of optimizing marketing performance, but large, complex data sets can make that part of the job challenging. This is especially true when analyzing keywords and search terms in paid search advertising, where there might be hundreds of thousands (if not millions) of long-tail keywords and search term permutations.

To make things worse, the keyword-level metrics in these large data sets are often too small to support meaningful, actionable insights. For example, you might have a target CPA of $250 for an account but thousands of search terms that have each spent less than $2.00.

So what’s a digital marketer to do?

To get actionable insights from a data set spread over many different keywords, you need to aggregate similar keywords. Aggregation preserves the signal and integrity of the underlying data while giving you a larger, more meaningful sample on which to base your decisions.

What Is an N-Gram?

Before we dive into our example, let’s make sure we know what an n-gram is.

An n-gram is a contiguous sequence of n items (in search term analysis, words) from a text or speech sample.

For example, with n = 3, the text “live in a van down by the river” yields the 3-grams “live in a,” “in a van,” “a van down,” “van down by,” “down by the,” and “by the river.” Here the n-grams were listed by hand, but tools like Power Query in Excel or Google Ads scripts can generate them far more efficiently.
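The same word-level split is easy to sketch in Python. This is a minimal illustration rather than the Power Query or Google Ads script approach; it assumes terms are lowercased and split on whitespace, and the function name `ngrams` is our own:

```python
def ngrams(text: str, n: int) -> list[str]:
    """Return the word-level n-grams of `text`, in order of appearance."""
    # Assumption: lowercase and split on whitespace; real search terms may
    # need extra normalization (punctuation, plurals, misspellings).
    words = text.lower().split()
    # Slide a window of n words across the text; a text with fewer than
    # n words produces no n-grams.
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]


print(ngrams("live in a van down by the river", 3))
# ['live in a', 'in a van', 'a van down', 'van down by', 'down by the', 'by the river']
```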

How does this apply to search engine marketing (SEM)? For large search term data sets where the data is sparse at the individual search term level, n-gram analysis helps with three main tasks:

- Finding new keyword themes or permutations to add to your account.

- Determining which keywords should be added as negative keywords.

- Applying bid adjustments to a group of keywords based on the collective underlying search term performance data.

An Example of N-Gram Analysis of Aggregate Search Terms

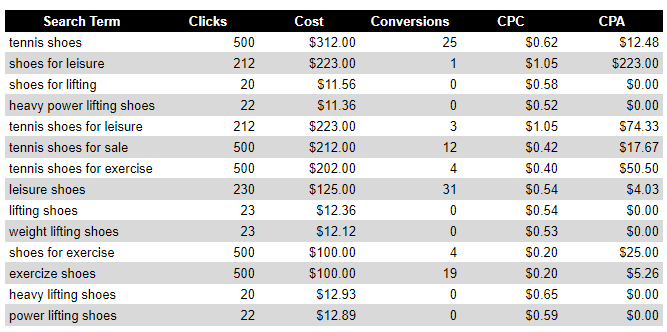

To better understand the value of n-gram analysis, let’s walk through a hypothetical search term data set for an e-commerce company that sells various types of shoes online. Their goal is to sell 90 pairs of shoes per month at a maximum CPA of $25. Below is a snapshot of some of the search terms and associated performance data for this company over the past few months:

Some search terms immediately stand out as candidates for negative exact match keywords or lower bids because their CPAs are well above goal. For example, “shoes for leisure,” “tennis shoes for exercise,” and “tennis shoes for leisure” all need adjustment because their CPAs are much higher than the $25 target. Simply adding these search terms as negative exact match keywords would, if applied retroactively, have produced 92 conversions at a $10.02 CPA. Success!
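The retroactive check is plain arithmetic: drop the excluded terms, then divide the remaining cost by the remaining conversions. A minimal Python sketch with hypothetical numbers (not the figures from the snapshot), where the `SearchTerm` class and helper names are illustrative:

```python
from dataclasses import dataclass


@dataclass
class SearchTerm:
    term: str
    cost: float       # spend in dollars
    conversions: int


def cpa(cost: float, conversions: int) -> float:
    """Cost per acquisition; infinite when spend produced no conversions."""
    return cost / conversions if conversions else float("inf")


def totals_without(terms: list[SearchTerm], excluded: set[str]) -> tuple[int, float]:
    """Total conversions and blended CPA had the `excluded` terms been negated."""
    kept = [t for t in terms if t.term not in excluded]
    cost = sum(t.cost for t in kept)
    conversions = sum(t.conversions for t in kept)
    return conversions, cpa(cost, conversions)


# Hypothetical data for illustration only.
terms = [
    SearchTerm("running shoes", 300.0, 30),
    SearchTerm("tennis shoes", 200.0, 20),
    SearchTerm("shoes for leisure", 120.0, 2),
]
target_cpa = 25.0
# Treat every term whose CPA exceeds the target as a negative keyword.
excluded = {t.term for t in terms if cpa(t.cost, t.conversions) > target_cpa}
print(excluded)                         # {'shoes for leisure'}
print(totals_without(terms, excluded))  # (50, 10.0)
```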

But we can do better than that, through the power of n-grams.

Optimizing With Granular Data

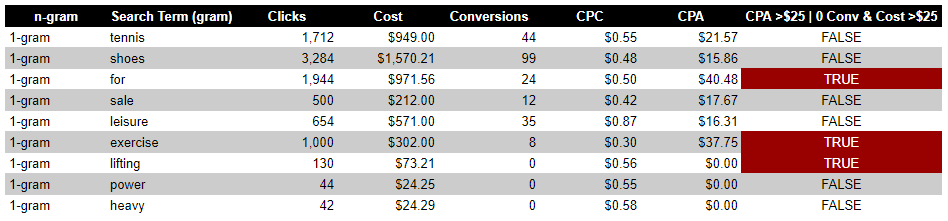

At the simplest level, we have 1-gram data, as seen below.

Individually, each search term containing the word “lifting” spent less than $13, but in aggregate these search terms have spent $73 without a single conversion. Depending on your threshold for taking action, this could warrant bid optimization, adding negative keywords, or testing new ad copy or landing pages for this theme to look for conversion rate lift opportunities.
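The 1-gram roll-up works by crediting each search term’s spend and conversions to every word it contains, then summing by word. A minimal Python sketch, assuming search term data as (term, cost, conversions) tuples; the rows and the $25 action threshold are hypothetical, and the helper names are our own:

```python
from collections import defaultdict


def aggregate_ngrams(rows, n):
    """Sum cost and conversions for every word-level n-gram across search terms.

    `rows` is an iterable of (search_term, cost, conversions) tuples.
    """
    stats = defaultdict(lambda: {"cost": 0.0, "conversions": 0, "terms": 0})
    for term, cost, conversions in rows:
        words = term.lower().split()
        # Use a set so each n-gram is counted once per search term, even if
        # the term repeats it.
        for gram in {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}:
            stats[gram]["cost"] += cost
            stats[gram]["conversions"] += conversions
            stats[gram]["terms"] += 1
    return dict(stats)


def flag_wasted_spend(stats, min_cost):
    """Return n-grams with at least `min_cost` spend and zero conversions."""
    return sorted(g for g, s in stats.items()
                  if s["cost"] >= min_cost and s["conversions"] == 0)


# Hypothetical search terms: (term, cost in dollars, conversions).
rows = [
    ("lifting shoes", 12.0, 0),
    ("shoes for lifting", 11.0, 0),
    ("weight lifting shoes", 9.0, 0),
    ("running shoes", 40.0, 3),
]
one_grams = aggregate_ngrams(rows, 1)
print(one_grams["lifting"])              # {'cost': 32.0, 'conversions': 0, 'terms': 3}
print(flag_wasted_spend(one_grams, 25))  # ['lifting']
```

No single “lifting” term clears the threshold on its own, but the aggregated 1-gram does, which is the whole point of rolling up sparse data.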

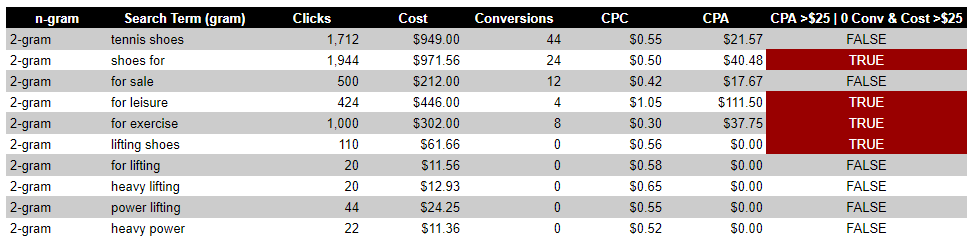

Looking at the 1-gram data above, another group could be formed from search terms that include the word “for.” But by looking at the 2-gram data (as seen below), we can see the difference between search terms that contain the phrase “shoes for” and not “for sale.” This insight might cause us to break these terms out into separate ad groups that get their own bids or tailored ads to maximize volume on the “for sale” terms and improve efficiency on the “shoes for” terms.
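One way to act on a 2-gram split is to route each search term into a segment by the phrases it contains. In this hypothetical Python sketch (made-up terms, illustrative function names), “for sale” is checked before “shoes for,” so the “shoes for” group holds exactly the terms that contain “shoes for” but not “for sale”:

```python
def contains_ngram(term: str, phrase: str) -> bool:
    """True if `phrase` appears in `term` as a run of whole words."""
    words, target = term.lower().split(), phrase.lower().split()
    n = len(target)
    return any(words[i:i + n] == target for i in range(len(words) - n + 1))


def segment(terms, phrases):
    """Map each phrase to the search terms containing it; the rest go to 'other'."""
    groups = {p: [] for p in phrases}
    groups["other"] = []
    for term in terms:
        # The first matching phrase wins, so each term lands in exactly one group.
        match = next((p for p in phrases if contains_ngram(term, p)), "other")
        groups[match].append(term)
    return groups


# Hypothetical search terms.
terms = [
    "tennis shoes for sale",
    "shoes for lifting",
    "running shoes for sale",
    "shoes for leisure",
    "kids shoes",
]
print(segment(terms, ["for sale", "shoes for"]))
```

Because a term like “tennis shoes for sale” contains both phrases, the order of the phrase list decides where overlapping terms land. Matching whole words (rather than substrings) also keeps “shoes for” from matching a term like “shoes form.”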

Running N-Gram Analysis at Scale

While it may be easy to spot these opportunities and optimizations in a snapshot of a smaller account, it’s much harder (if not impossible) to identify these patterns manually when there are thousands or millions of search terms. Instead, you can automate the process with scripts or Power Query. For example, you can use this simple script from Search Engine Land to run an n-gram analysis.

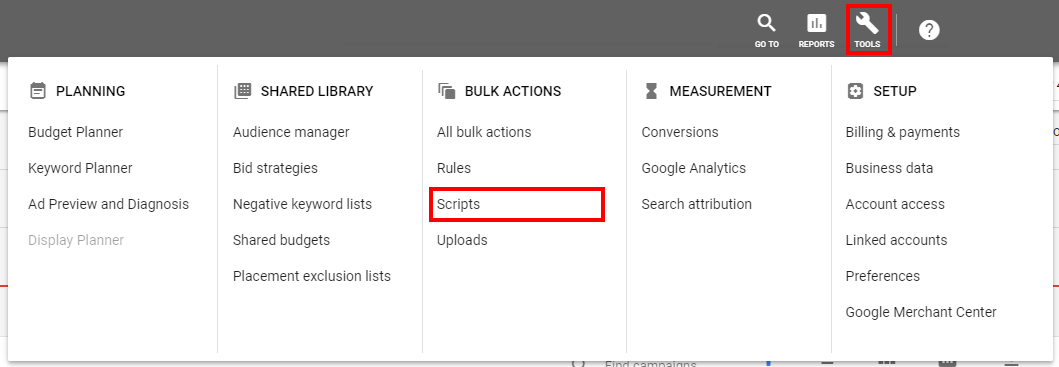

To run this script:

1. Click into Tools > Scripts

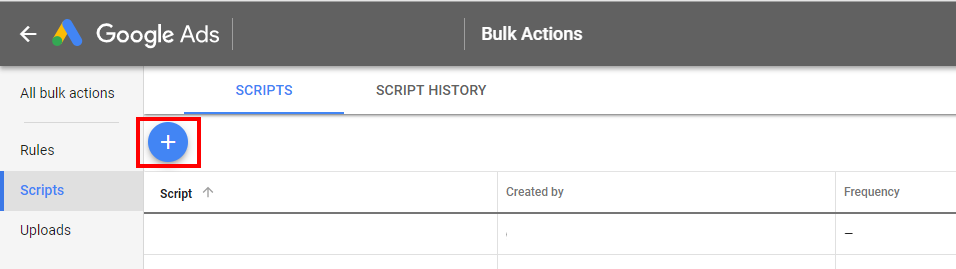

2. Create a new script by pressing the (+) button

3. Name the script and paste in the script code

4. Create a Google Sheet and set its link sharing to “Anyone with the link can edit”

5. In the script, replace the spreadsheet URL with your Google Sheet’s URL

6. Save and authorize the script to run

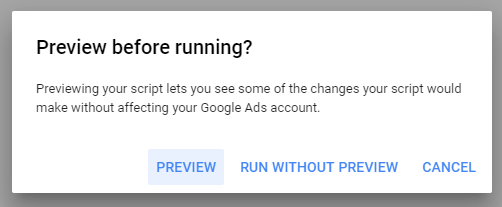

7. Click Run, then Run Without Preview, and wait for the output to appear in your Google Sheet
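If you would rather work outside Google Ads, the same aggregation can run offline on an exported search terms report. This Python sketch is an alternative to, not a copy of, the Search Engine Land script. It assumes a CSV with plain numeric values and columns named “Search term,” “Cost,” and “Conversions”; adjust the hypothetical `term_col`, `cost_col`, and `conv_col` parameters to match your export:

```python
import csv
import io
from collections import defaultdict


def ngram_report(src, dst, max_n=3, term_col="Search term",
                 cost_col="Cost", conv_col="Conversions"):
    """Aggregate cost and conversions for all 1- to max_n-grams in a CSV export.

    Column names are assumptions; adjust them to match your report.
    """
    stats = defaultdict(lambda: [0.0, 0.0])  # (n, gram) -> [cost, conversions]
    for row in csv.DictReader(src):
        words = row[term_col].lower().split()
        cost, conv = float(row[cost_col]), float(row[conv_col])
        for n in range(1, max_n + 1):
            # Count each n-gram once per search term.
            for gram in {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}:
                stats[(n, gram)][0] += cost
                stats[(n, gram)][1] += conv
    writer = csv.writer(dst)
    writer.writerow(["n", "ngram", "cost", "conversions", "cpa"])
    # Highest spend first; ties broken by (n, ngram) so the output is stable.
    for (n, gram), (cost, conv) in sorted(stats.items(),
                                          key=lambda kv: (-kv[1][0], kv[0])):
        cpa = round(cost / conv, 2) if conv else ""  # blank CPA when no conversions
        writer.writerow([n, gram, round(cost, 2), conv, cpa])


# Example with an in-memory CSV of hypothetical data.
src = io.StringIO("Search term,Cost,Conversions\nlifting shoes,12,0\nrunning shoes,40,4\n")
dst = io.StringIO()
ngram_report(src, dst, max_n=2)
print(dst.getvalue())
```

Real exports may include a report title row or total rows, and may format costs with currency symbols or thousands separators, so those would need to be cleaned up before parsing.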

N-gram analysis lets you derive insights from aggregated search data that individual search terms are too sparse to reveal on their own. Most importantly, it lets you put large sets of granular data to work to better optimize your paid search account and maximize performance.