In the world of marketing, data governance is rarely the focus when analytics are failing. As Wpromote’s Director of Digital Analysis, I know how important clean, consistent data is to the advanced models and forecasts marketing execs adore, but I’ve also witnessed firsthand the resistance to spending time and effort on the bedrock data that makes those models work.

Execs are understandably more excited about the shiny new tools and their potential ROI. But if you bypass the foundational step of consistently labeling, managing, and updating your data, your business can waste hundreds of hours and lose millions of dollars on models that will never work.

Seriously. Millions.

Garbage In, Garbage Out: Why Bad Data Governance Leaves Your Advanced Data Modeling Outcomes A Mess

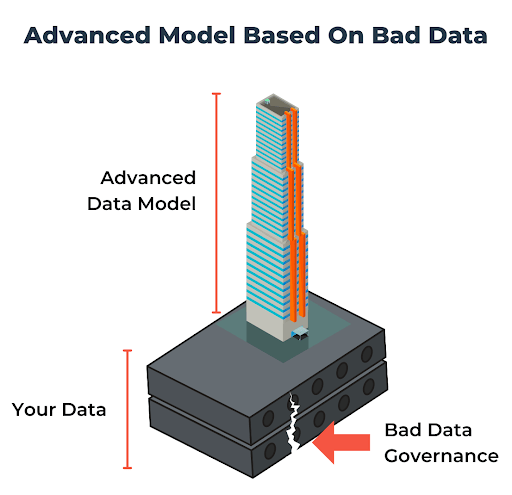

So let’s get the bad news out of the way: if your brand’s sparkling new, fresh out of the box, advanced marketing model is built on bad data… it’s trash.

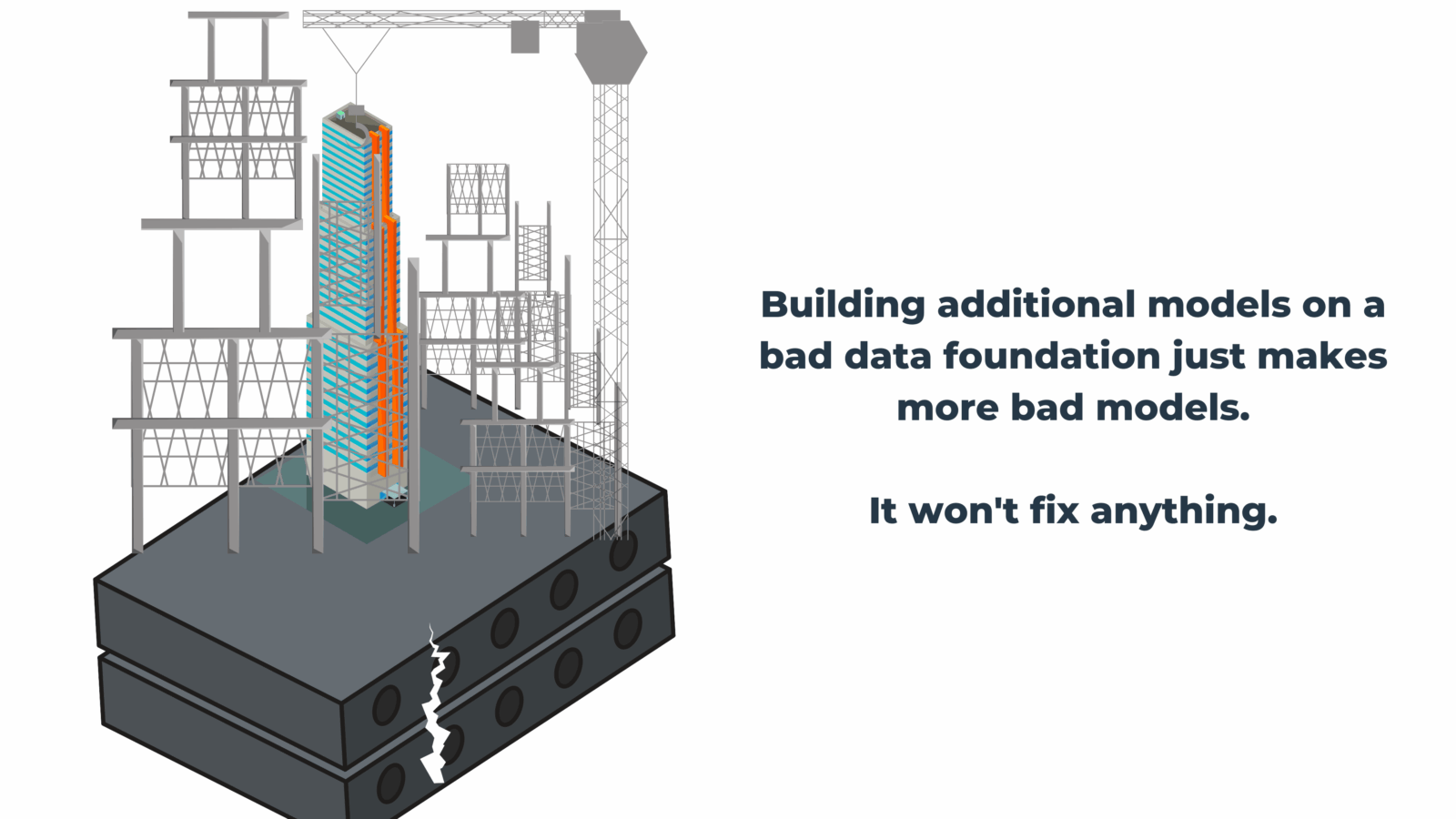

If you’re lucky, it might work for a little while, but you’ll never be able to get it to work long-term. Because it’s been built and trained on bad inputs. And too often the way people try to “fix” a broken model is by building something even more complex. If you haven’t fixed the initial flaws in the data, it’s still broken.

Think about it like a skyscraper: the cool glamorous tower part that everyone is excited about is your advanced model. But if your tower is built on a bad or flawed foundation, you’re going to have some major problems (just ask the residents at 432 Park Ave).

Building a bunch of intricate scaffolding to prop up your tower without addressing what’s broken in the foundation might make things better for a time, but it isn’t addressing the fundamental problem. If the data it’s all built on is still bad, your beautiful model could eventually go the way of the London Bridge.

All Fall Down: The Bias-Variance Tradeoff and Other Tales of Bad Data Governance

The magnetic pull of a model-centric (as opposed to a data-first) approach to advanced data analysis is hard to ignore. But pioneering experts in the field like Andrew Ng are pushing data scientists to resist the attraction of building fancy models to fit messy data. One major consideration for any analyst using statistical modeling is the Bias-Variance Tradeoff.

There are two major errors associated with the Bias-Variance Tradeoff:

Overfitting: High variance, low bias

Your model is overly sensitive and ends up fitting random noise. It’s not always immediately obvious that something is wrong, because the model can produce seemingly reliable insights for the specific data sets it was built on, but those insights can’t be accurately applied to new or additional data sets.

Underfitting: Low variance, high bias

When your model is too simple, or you don’t have enough signal from your data, it misses relevant patterns and fails to accurately predict outcomes.
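To make those two failure modes concrete, here’s a minimal sketch in Python (using NumPy). Everything in it is an assumption for illustration: the sine-wave “true” pattern, the noise level, and the polynomial degrees are made up, and it isn’t how any specific marketing model works. It fits a too-simple model, a reasonable one, and a too-flexible one to the same noisy data, then compares the error on data the model saw with the error on data it didn’t.

```python
import numpy as np


def true_pattern(x):
    # Hypothetical "real" relationship hiding underneath the noise.
    return np.sin(2 * np.pi * x)


def fit_and_score(degree, x_train, y_train, x_test, y_test):
    """Fit a polynomial of the given degree; return (train_mse, test_mse)."""
    coeffs = np.polyfit(x_train, y_train, degree)
    train_mse = float(np.mean((np.polyval(coeffs, x_train) - y_train) ** 2))
    test_mse = float(np.mean((np.polyval(coeffs, x_test) - y_test) ** 2))
    return train_mse, test_mse


rng = np.random.default_rng(0)  # fixed seed so the run is repeatable

# Small, noisy training set and a larger held-out set from the same pattern.
x_train = np.linspace(0.0, 1.0, 15)
x_test = np.linspace(0.0, 1.0, 200)
y_train = true_pattern(x_train) + rng.normal(0.0, 0.3, x_train.size)
y_test = true_pattern(x_test) + rng.normal(0.0, 0.3, x_test.size)

# Degree 1: too simple (underfit). Degree 3: reasonable.
# Degree 10: flexible enough to chase the noise (overfit).
results = {
    degree: fit_and_score(degree, x_train, y_train, x_test, y_test)
    for degree in (1, 3, 10)
}

for degree, (train_mse, test_mse) in results.items():
    print(f"degree={degree:>2}  train MSE={train_mse:.3f}  test MSE={test_mse:.3f}")
```

In a setup like this, the straight line tends to score poorly everywhere (underfitting), while the degree-10 curve tends to look great on the training points and noticeably worse on fresh ones (overfitting). The gap between the two error columns is the warning sign to watch for.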

If you’re relying on advanced data modeling, you need to hire people with a rare combination of talents. But with a data-centric approach, you might not actually need a unicorn hire that combines digital marketing expertise with statistical modeling and computer science skills.

Using better data means traditional, less complex machine learning models are likely to solve your problems, which means you don’t necessarily need experienced data scientists to do the work. Instead, data analysts can pull valuable insights from these simpler models while learning the nuts and bolts of data science in a (relatively) clean environment.

“When a system isn’t performing well, many teams instinctually try to improve the code. But for many practical applications, it’s more effective instead to focus on improving the data.”

But that’s not the only part of your strategy you should reconsider. Organizations that throw advanced models like neural networks at problems with high bias should stop and evaluate their approach. They’re in danger of using some very expensive Band-Aids on a wound that won’t ever heal without going back to the very beginning: the data.

And all of this is preventable.

That’s why every client using Growth Planner, our high velocity mixed media model in Polaris, is closely paired with our data governance offering. It’s not because we’re mean; it’s because we know that Growth Planner (or any model, for that matter) won’t work if it’s based on bad data. It’s how we know that the insights from Growth Planner are accurate, actionable, and drive actual value. We practice what we preach.

More Value, Lower Cost: Applying The 80/20 Principle to Data Analysis

There’s an old truism in the data science world: 80% of your time and effort should be spent cleaning the data and 20% modeling it.

The thing with those old sayings? They’re often true.

By establishing mature data governance best practices, your data scientists can build advanced models that work and provide valuable insights that drive business growth.

Enterprises can save millions of dollars by crossing their t’s and dotting their i’s with data governance that ensures the foundation of their advanced analysis is sound because it’s built on the right taxonomies, it’s clean, and it’s complete.

But data governance is not just about saving money you’d otherwise be throwing away. It’s about profitable growth. It might not be exhilarating to talk about the minutiae of how your business treats state designations (do you use the full state name or the abbreviation?), but it’s the only way you’ll be able to build and deploy advanced models that give your business a competitive edge through accurate analysis, insights, and predictions.
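The state-designation question is a good example of how small this work can be in practice. Here’s a rough sketch in Python of one way to enforce a single convention (two-letter codes) and flag anything that doesn’t match. The lookup table, the function name `normalize_state`, and the sample values are all hypothetical; a real version would cover every state and territory and live wherever your team manages its taxonomies.

```python
# Hypothetical lookup table: a real one would list every state and territory.
STATE_ABBREVIATIONS = {
    "california": "CA",
    "new york": "NY",
    "texas": "TX",
}
VALID_CODES = set(STATE_ABBREVIATIONS.values())


def normalize_state(raw):
    """Return the two-letter code for a state value, or None if unrecognized."""
    # Trim, collapse repeated whitespace, and drop periods ("N.Y." -> "NY").
    cleaned = " ".join(raw.strip().split()).replace(".", "")
    if cleaned.upper() in VALID_CODES:
        return cleaned.upper()
    return STATE_ABBREVIATIONS.get(cleaned.lower())


# Made-up sample of the inconsistencies that creep into marketing data.
rows = ["California", "CA", " ca ", "Calif.", "new  york", "TX"]

normalized = [normalize_state(value) for value in rows]

# Anything unrecognized is surfaced for a human to review instead of guessed.
unmatched = [value for value, code in zip(rows, normalized) if code is None]

print(normalized)
print(unmatched)
```

The design choice worth noting is that unrecognized values come back as `None` and get collected for review. Quietly guessing at what “Calif.” means is exactly how inconsistent labels end up inside a model.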

Data Governance Best Practices: 4 Ways Data Governance Unlocks The Competitive Advantage

When you get down to brass tacks, data governance is just good business. Firms that adopt data governance best practices will win in the coming age of AI. Companies that neglect to establish these processes will be outmaneuvered.

Here are 4 advantages you can unlock with a sturdy and reliable data foundation:

- Optimize Your Time: With strong data governance in place, data analysts can spend more time building models and less time cleaning up what’s not working after the fact. It also sets you up to avoid wasting time running sophisticated models only to find that your results are worthless.

- Spend Less, Get More Value: The better your data is, the less sophisticated your algorithms need to be. By doing the essential legwork to get your data house in order before building your model, you’ll be able to use simpler models that require less investment but produce exceptional results.

- Democratize Your Data Analysis: When you’re running fewer baroque models, you won’t have to hire an entire team of data scientists armed with PhDs to understand the outputs. You can let less experienced analysts handle the job and reliably provide quality insights.

- Make Better Marketing Decisions: When you optimize your time, spend less on tech, and make your data analysis more accessible, you’re already at a tremendous competitive advantage from a cost-savings perspective. But you also have the opportunity to build better models, beautiful models, models that accurately predict and forecast what you need to do next, where you need to spend, or which channels will see the best ROI. Models that work.

And take it from a data scientist: the sexiest of advanced models are the kind built on solid ground because they’re based on solid data.