There are a number of technical SEO issues that can keep your site from reaching its full ranking potential in the search engines, but this list covers the 10 mistakes that I come across most often. Some of these problems are harder to address than others, but if you can get all of these ducks in a row, you have a much better chance of beating your competitors in the organic search landscape.

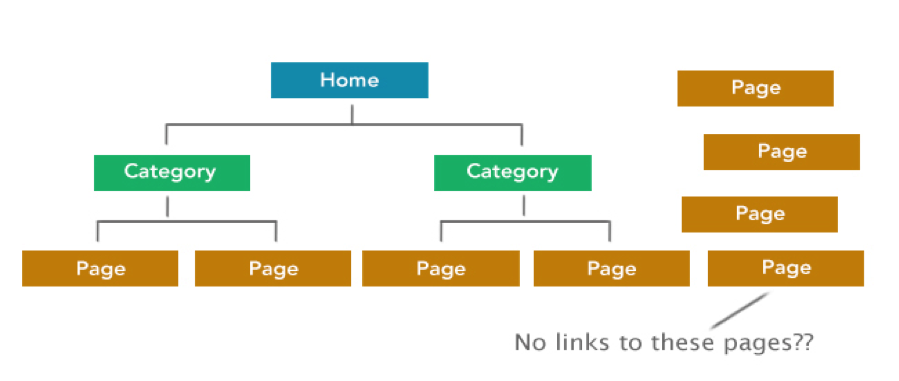

1. Lousy Site Structure

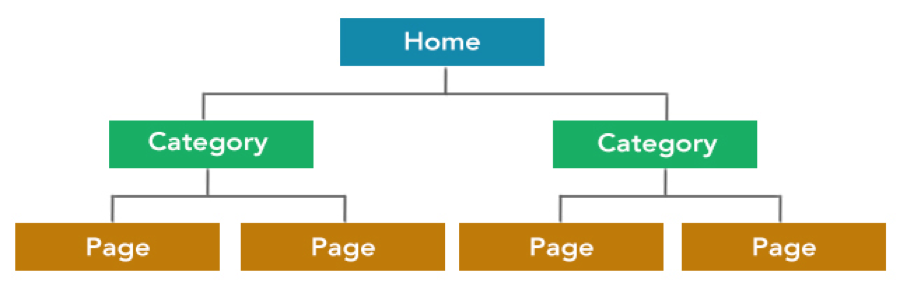

Why It’s Bad: A well thought-out site structure, with clear directory paths and an intuitive internal linking structure, makes it easier for search engines to index your site. It also matters to your visitors: Google is continually adding elements to its algorithm that judge “usability” for your audience.

How To Fix It: Whether your site has 50 or 5,000 pages, it’s important to organize the information into categories or sub-folders. All navigational menus should be methodically constructed, and you should never be more than three clicks away from any other page on your site. This will lead to a user-friendly website that is easily crawled by search engines.
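One way to check the three-click guideline is to treat the site as a graph of internal links and measure click depth with a breadth-first search. The sketch below is only an illustration: the `SITE` link map and its paths are made up, and it measures depth from the home page rather than between every pair of pages, which is a simplification.

```python
from collections import deque

def click_depths(links, start):
    """Return the minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def pages_too_deep(links, start, max_clicks=3):
    """List pages that need more than `max_clicks` clicks to reach from `start`."""
    depths = click_depths(links, start)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)

# Hypothetical internal-link map: page -> pages it links to.
SITE = {
    "/": ["/shoes/", "/about/"],
    "/shoes/": ["/shoes/running/"],
    "/shoes/running/": ["/shoes/running/trail/"],
    "/shoes/running/trail/": ["/shoes/running/trail/model-x/"],
}

print(pages_too_deep(SITE, "/"))
```

Any page this reports is a candidate for a link from a category page or a navigational menu.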

How It’s Changing: This is something that will always be important to your site. As Google’s algorithm evolves, it will be better able to determine how your site performs from a UX standpoint. For that reason, it’s crucial to get organized sooner rather than later.

2. Unfriendly URLs

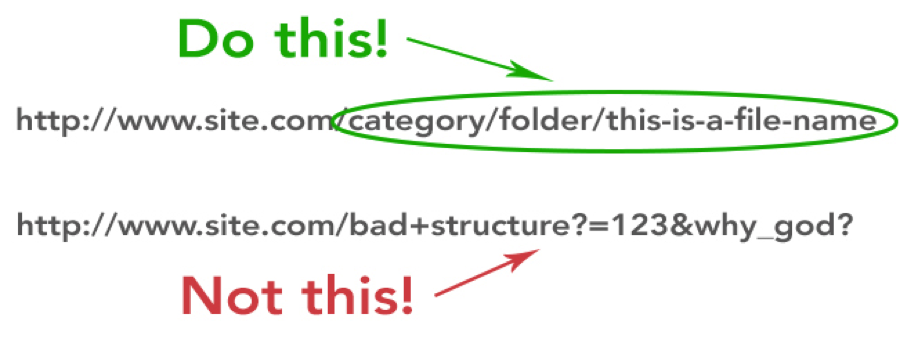

Why It’s Bad: Unfriendly URLs are those that are dynamically-generated or contain special characters like question marks and ampersands. SEO friendly URLs, on the other hand, contain keywords and are easy to read and understand for both search engines and users. Since it’s important to make your site as easy to index and rank as possible, it’s crucial to have SEO friendly URLs.

How To Fix It: This is often a complicated problem to fix if your URLs are already bad. For that reason, the best solution is to follow the previous step and make sure your site structure is as intuitive as possible in the design stage, when you first create your site. If the damage is already done, don’t worry: there are still some alternatives. Depending on your site’s CMS, you may be able to make “link templates” that can be applied across your entire site. Otherwise, your developer may be able to create dynamic URL rewrites.
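To give a feel for what a “link template” might do, here is a minimal Python sketch that turns a category and a page title into a readable, keyword-based path. The function names and the `/category/title/` pattern are assumptions for illustration, not the behavior of any particular CMS.

```python
import re
import unicodedata

def slugify(text):
    """Turn free text into a lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent, then drop the accents.
    normalized = unicodedata.normalize("NFKD", text)
    ascii_text = normalized.encode("ascii", "ignore").decode("ascii")
    # Remove apostrophes so "Men's" becomes "mens" rather than "men-s".
    ascii_text = ascii_text.replace("'", "")
    # Collapse every run of other characters (spaces, ?, &, =) into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")

def friendly_url(category, title):
    """Build a path of the (assumed) form /category/title/."""
    return f"/{slugify(category)}/{slugify(title)}/"

print(friendly_url("Shoes", "Men's Running Shoes & Gear"))
```

If existing URLs change as a result, the old addresses would still need permanent redirects to the new ones.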

How It’s Changing: Just like site structure, this will always be important for your site performance and rankings, and should only become more so in future algorithm updates.

3. Not Using Title Tags Correctly

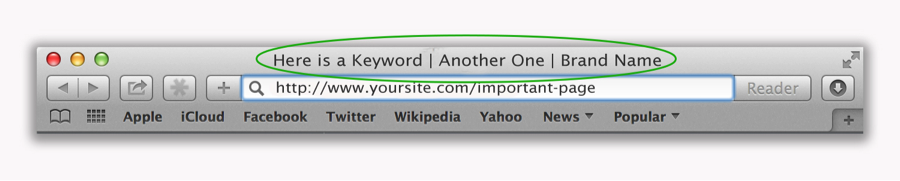

Why It’s Bad: Title tags are probably the biggest onsite factor that can influence search engine rankings. By putting your target keywords into the title tag, you’re essentially letting Google (and users) know that those terms relate to the content on that page. Many sites fail to take advantage of this and don’t rank as high as they could as a result.

Conversely, many sites try optimizing pages for keywords that aren’t related to their content. Google’s algorithm has gotten increasingly better at distinguishing “relevancy”. As a result, it’s highly unlikely your FAQ page, for example, will rank well for one of your important search terms.

How To Fix It: It’s important to do extensive keyword research before optimizing title tags. Once you know what terms you want to rank for, you can find the corresponding pages on your site where you can target them in the title tag. If you don’t have content that relates to those terms, but you think your site should rank for them, it’s best to create new, relevant pages instead of trying to target keywords on existing pages that don’t relate to those terms. In this way, you’re ensuring that the content of your site matches the searcher’s intent and provides the best possible user experience.
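Once you have a keyword list, a quick script can show which pages already mention a term in their title tag. This is a rough sketch using Python’s standard `html.parser`; the sample HTML and the helper names are invented for illustration, and a simple substring match is only a starting point, not a measure of relevancy.

```python
from html.parser import HTMLParser

class TitleParser(HTMLParser):
    """Collect the text inside the page's <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def extract_title(html):
    """Return the title text of an HTML document, or '' if there is none."""
    parser = TitleParser()
    parser.feed(html)
    return parser.title.strip()

def title_mentions(html, keyword):
    """Case-insensitive check for a target keyword in the title tag."""
    return keyword.lower() in extract_title(html).lower()

# Hypothetical page source.
PAGE = "<html><head><title>Trail Running Shoes | Example Store</title></head><body><h1>Shoes</h1></body></html>"

print(extract_title(PAGE), title_mentions(PAGE, "running shoes"))
```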

How It’s Changing: Since title tags have always been a major ranking factor, they have been a constant victim of black-hat SEO tactics like “keyword stuffing”. As a result, Google has gotten efficient at recognizing spammy tactics and has fine-tuned the weight that it gives title tags. While Google has recently made some minor tweaks to how it displays title tags in the search results, it’s clear that this is still a major signal for keyword rankings.

4. Sites/Pages That Load Too Slowly

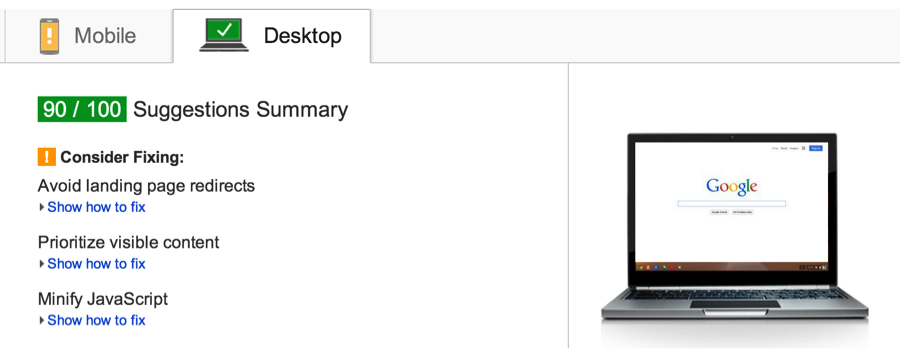

Why It’s Bad: How fast your pages load is a factor that directly contributes to rankings. If this wasn’t enough of a reason to optimize for it, it can also affect bounce rates, conversions, and user experience.

How To Fix It: There are many ways to speed up your webpages, like offloading excess JavaScript and CSS, optimizing your images, and leveraging browser caching. The best method is to visit Google’s PageSpeed Insights tool and analyze your site from both a desktop and mobile perspective.
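If you want to run the same desktop and mobile check for many pages, PageSpeed Insights also has an HTTP API. The sketch below only builds the two request URLs and makes no network call; the v5 endpoint and the `url` and `strategy` parameters are written from memory, so treat them as assumptions and confirm them against Google’s current API documentation before relying on them.

```python
from urllib.parse import urlencode

# Assumed endpoint of the PageSpeed Insights API (v5); verify before use.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"

def psi_request_urls(page_url):
    """Build one request URL per strategy (desktop and mobile) for a page."""
    return {
        strategy: f"{PSI_ENDPOINT}?{urlencode({'url': page_url, 'strategy': strategy})}"
        for strategy in ("desktop", "mobile")
    }

for strategy, request_url in psi_request_urls("https://example.com/").items():
    print(strategy, request_url)
```

Fetching each URL should return a JSON report for that strategy; heavier use of the API may require an API key.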

How It’s Changing: This has become increasingly important as sites on the web become more complex. Google officially added this to their algorithm in 2010, and it continues to be a significant ranking signal to this day.

5. Duplicate Content

Why It’s Bad: It is considered duplicate content if you’re feeding the same page content to the search engine and users from different URLs. Among other reasons, it’s a problem because the search engines have trouble deciding which page to index and direct their link metrics to (trust, authority, link juice, etc.).

How To Fix It: To fix this problem you need to pick a URL that you’d ultimately like the content to rank for and utilize the rel=canonical tag on the pages that you don’t need indexed. This tag tells the search engine that, although there may be multiple pages with that content on them, there is one in particular that you’d like them to index.
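As an illustration, the following Python sketch generates the canonical tag for a page that can be reached through several URL variants. It assumes, purely for the example, that the query string carries only sorting or tracking parameters and can be dropped; on a site where the query string selects the content, the preferred URL would have to be chosen differently.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

def canonical_url(url):
    """Collapse URL variants by dropping the query string and fragment.

    Assumption: query parameters never change the page content.
    """
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/", "", ""))

def canonical_link_tag(url):
    """Return the <link> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'

print(canonical_link_tag("https://Example.com/shoes/?sort=price&utm_source=mail"))
```

Every variant of the page would emit the same tag, pointing at the one URL you want indexed.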

How It’s Changing: While Google is getting better and better at determining which pages to index/rank, it’s still important to make sure your duplicate content issues are sorted out in order to gain the most “trust” for your site.

6. Poor Internal Linking

Why It’s Bad: Internal links are links on your site that point to other pages on your site. They’re important to pass authority through targeted anchor text, and are also helpful for search engines and users trying to navigate around your site.

How To Fix It: While internal linking is helpful to rankings, it’s critical not to be too “spammy” in your approach: only link to relevant pages that will help the user experience.

How It’s Changing: Since it’s been so heavily spammed, you have to be increasingly careful how you utilize this, especially when using exact match anchor text.

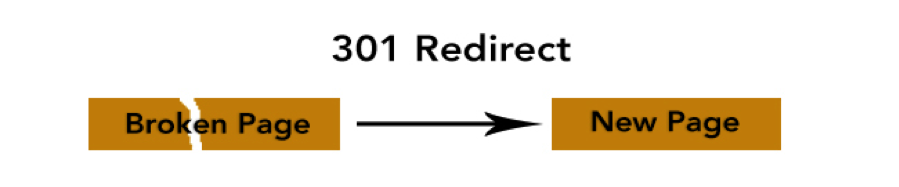

7. Not Utilizing 301 Redirects

Why It’s Bad: 301 redirects are used when someone is linking to a page on your site that is broken or no longer exists. If someone visits your site through that link, they are then shown a relevant page on your site, instead of the broken one. This helps user experience and can also improve your bounce rate.

How To Fix It: You can create a profile with Google Webmaster Tools (now called Google Search Console) and find a list of all the pages on your site that Google tried to crawl unsuccessfully. In many cases, it’s worthwhile to redirect those URLs to other relevant pages on your site. It’s important to note, however, that too many redirects can hurt your site performance. It’s OK to sometimes let pages 404 if that content no longer exists and there are no authoritative links pointing to the old URL.
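The decision described above (redirect, or let the page 404) can be sketched as a simple lookup. The paths below are hypothetical, and on a real site this logic would live in your server or CMS configuration rather than in a script; the second helper flags redirects that point at something other than a live page, since chains of redirects add extra hops.

```python
# Hypothetical data: pages that exist, and old paths mapped to replacements.
LIVE_PAGES = {"/", "/pricing/", "/blog/seo-tips/"}
REDIRECTS = {
    "/old-pricing/": "/pricing/",
    "/blog/2012/seo-tips/": "/blog/seo-tips/",
    "/sale/": "/old-pricing/",  # points at another redirect, so it forms a chain
}

def resolve(path):
    """Return (status_code, location) for a requested path."""
    if path in LIVE_PAGES:
        return 200, None
    if path in REDIRECTS:
        return 301, REDIRECTS[path]
    return 404, None  # no relevant replacement: let it 404

def chained_redirects():
    """List old paths whose target is not a live page (a chain or a dead end)."""
    return sorted(src for src, dst in REDIRECTS.items() if dst not in LIVE_PAGES)

print(resolve("/old-pricing/"), resolve("/gone/"), chained_redirects())
```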

How It’s Changing: Like the majority of issues on this list, this also speaks to the usability of your site, so as Google’s algorithm evolves it should increase in importance.

8. Non-Responsive Website Design

Why It’s Bad: Responsive websites are those that automatically resize to fit the device that a particular user is on. With mobile products taking over more and more of the search market share, it stands to reason that your site should perform well on these devices.

How To Fix It: While this is a pretty substantial development task for most businesses, it will save time in the long run by eliminating the need to update (or build) a completely separate mobile site.

How It’s Changing: Google recently announced that older sites should not “be complacent”, and stressed the importance of updating the site often with fresh content. With more people viewing the web on mobile devices, it’s crucial that your site looks good and functions properly on them.

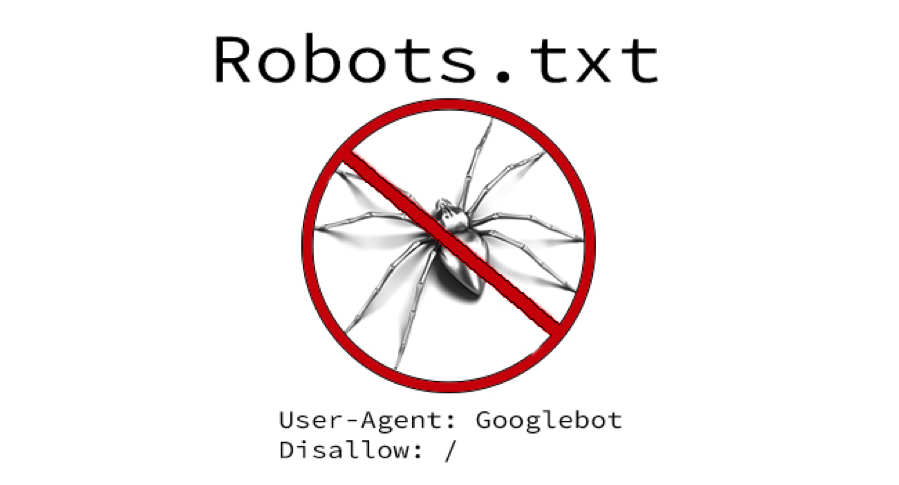

9. Robots.txt File Blocking Pages Or Images From Being Crawled

Why It’s Bad: The robots.txt file exists for the sole reason of telling search engines which pages on your site shouldn’t be crawled. Generally, it’s best to only disallow engines from visiting areas on your site that don’t have any value in the search results. A good example of this would be a login page. However, some mistrusting webmasters block them from crawling whole directories, JavaScript, CSS, and even their images. Since image search gets a fairly large volume of traffic, you could be missing out on a number of qualified leads by blocking these sorts of files.

It’s also important to remember that if Google isn’t allowed to crawl a page, it can’t tell if that page passes authority to other pages on your site. So if you’re internally linking from a blocked page, that link will not pass any juice.

How To Fix It: This is the easiest one to fix. Simply don’t block engines from crawling anything but protected areas of the site unless you have a very good reason.
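Before changing anything, it can help to see what your current rules actually block. Here is a small sketch using Python’s standard `urllib.robotparser`; the robots.txt content and URLs are made up, and Python’s parser may not interpret every rule exactly the way Google’s crawler does (it does not handle wildcard patterns, for instance), so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks more than the login area.
ROBOTS_TXT = """\
User-agent: *
Disallow: /login/
Disallow: /images/
Disallow: /assets/css/
"""

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

URLS = [
    "https://example.com/login/",
    "https://example.com/shoes/",
    "https://example.com/images/shoe.png",
]

print(blocked_urls(ROBOTS_TXT, URLS))
```

In this example the login page is a sensible block, while the image is the kind of file the section above suggests leaving crawlable.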

How It’s Changing: This has significantly dropped in value after Google’s 2013 image search update, which brought high-resolution pictures into the search results. However, since the number of users searching for images continues to increase, it’s still worth allowing them to be crawled in hopes of grabbing some qualified leads from that channel.

10. Soft 404 Errors & 302 Redirects

Why It’s Bad: Soft 404s tell search engines that a page exists on your site when it doesn’t. 302 redirects tell search engines that a page on your site is “moved temporarily.” Both of these can make it harder for search engines to properly crawl and index your site.

How To Fix It: Crawl your entire site with a tool like Screaming Frog and then sort by “302.” Then, you’ll be able to decide if the page should, in fact, be redirected permanently with a 301. For soft 404s, create your own custom 404 error page, show it for any missing or broken URL, and make sure the server sends it with a real 404 status code rather than a 200.
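If you export status codes and page text from a crawl, a few lines of Python can sort the results into the cases discussed here. This is only a heuristic sketch: the labels and the list of “not found” phrases are assumptions chosen for illustration, and a phrase match will both miss some soft 404s and flag some real pages.

```python
# Assumed wording that an error page might contain; adjust for your site.
NOT_FOUND_PHRASES = ("page not found", "no longer exists")

def classify(status, body):
    """Label one crawled URL from its HTTP status code and page text."""
    text = body.lower()
    if status == 302:
        return "temporary-redirect"  # consider a 301 if the move is permanent
    if status == 200 and any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return "possible-soft-404"   # error content served with a success code
    if status == 404:
        return "hard-404"
    return "ok"

# Hypothetical crawl results: (url, status, body).
CRAWL = [
    ("/shoes/", 200, "<h1>Running shoes</h1>"),
    ("/old-page/", 200, "<h1>Page Not Found</h1>"),
    ("/sale/", 302, ""),
    ("/gone/", 404, "<h1>Page not found</h1>"),
]

for url, status, body in CRAWL:
    print(url, classify(status, body))
```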

How It’s Changing: These are more “evergreen” issues that will occur as long as the Internet exists in its current state. However, since these can also be considered usability problems, they could gain importance in future iterations of Google’s algorithm.
